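What is the difference between a POMDP and an MDP? How should partial observability be understood? - Zhihu

Comparing the Bellman optimality equations of the Belief MDP and the ordinary MDP shows that the core difference is that the Belief MDP sums over observations, whereas the MDP sums over states. In an MDP, the current state is known and the action is known, but the next state …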
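The contrast the snippet describes can be written out as a sketch in standard textbook notation. None of the symbols below appear in the snippet itself; they are assumptions: $P$ is the transition model, $O$ the observation model, $R$ the reward, $\gamma$ the discount factor, $b$ a belief (a distribution over states), and $b^{a,o}$ the belief after taking action $a$ and observing $o$.

```latex
\begin{align}
% Ordinary MDP: the expectation is a sum over next states s'
V^*(s) &= \max_{a} \Big[ R(s,a) + \gamma \sum_{s'} P(s' \mid s,a)\, V^*(s') \Big] \\
% Belief MDP: the expectation is a sum over observations o
V^*(b) &= \max_{a} \Big[ \sum_{s} b(s)\, R(s,a) + \gamma \sum_{o} P(o \mid b,a)\, V^*(b^{a,o}) \Big] \\
% Probability of observing o after taking a in belief b
P(o \mid b,a) &= \sum_{s'} O(o \mid s',a) \sum_{s} P(s' \mid s,a)\, b(s) \\
% Bayesian belief update
b^{a,o}(s') &= \frac{O(o \mid s',a) \sum_{s} P(s' \mid s,a)\, b(s)}{P(o \mid b,a)}
\end{align}
```

Under these assumptions, the next belief is fully determined once $a$ and $o$ are fixed, so the only remaining randomness is which observation arrives. That is why the outer sum runs over $o$ rather than over $s'$.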

Real-life examples of Markov Decision Processes

Apr 9, 2015 · I haven't come across any lists as of yet. The most common one I see is chess. Can it be used to predict things? If so what types of things? Can it find patterns among infinite amounts of …

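Why is reinforcement learning generally modeled as a Markov Decision Process (MDP)? What …

8 answers · 中原一点红: This is my personal understanding, and I welcome discussion. In short: an MDP is a framework for formalizing sequential decision problems, while reinforcement learning can be understood as a class of methods for solving MDPs or their extensions, so reinforcement …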

machine learning - From Markov Decision Process (MDP) to Semi …

Jun 20, 2016 · Markov Decision Process (MDP) is a mathematical formulation of decision making. An agent is the decision maker. In the reinforcement learning framework, he is the learner or the …

What is the difference between Reinforcement Learning (RL) and …

May 17, 2020 · What is the difference between a Reinforcement Learning (RL) and a Markov Decision Process (MDP)? I believed I understood the principles of both, but now when I need to compare the …

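Do all MDP problems count as reinforcement learning problems? - Zhihu

Oct 25, 2022 · No. In fact, when most researchers mention MDPs they are not referring to reinforcement learning at all but to "DP" (dynamic programming), as in "Heuristic Search for Generalized Stochastic Shortest Path MDPs". Reinforcement learning, in the …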

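After submitting to MDPI, does the "pending review" status mean the editor has not looked at the manuscript yet? - Zhihu

An explainer on MDPI's pending review status and desk rejections. "Pending review" is the very first status after submission, during which the journal's assistant editor checks novelty, the number of papers already published on similar topics, the authors' country and background, and so on. As is well known, MDPI has already …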

Equivalent definitions of Markov Decision Process

Nov 3, 2020 · I'm currently reading through Sutton's Reinforcement Learning where in Chapter 3 the notion of MDP is defined. What it seems to me the author is saying is that an MDP is completely …

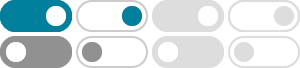

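Is there any difference between a DP port and an mDP port? - Zhihu

The monitor I bought only comes with a DP-to-mDP cable. Is it worth buying a DP-to-DP cable? Is the mDP port slightly worse?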

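Is there any difference between a Mini DP-to-DP cable and a regular DP cable? - Zhihu

Mar 1, 2021 · The only difference is the physical connector; everything else is the same. Mini DP can also support DP 1.4 and run 4K at 120 Hz; do not listen to people who simply repeat the claim that mini DP does not support DP 1.4. For example, on NVIDIA's Quadro P620, the four mDP ports it carries …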